AI-powered personal assistants are becoming the norm across major tech products, with nearly every firm diving into the artificial intelligence sector. Now research suggests humans may develop a little too much affection for these virtual assistants, something that is not likely to end well.

Welcome back to HODL.FM, where we encourage everyone to hodl as many insights as possible. Our insights are like a compass: they help you navigate Web3.

Our latest artificial intelligence news breaks down a newly released study that highlights the risk of users developing human-like emotional attachments to AI virtual assistants.

But if you’re thinking of replacing your Friday afternoon with the boys, or giving up your Saturday night out with the girls, for a “go&*%$n” bot, please forget it.

“These agents may disrupt the nature of work, how we create things, and how we communicate, but this gives them no right to take away the human in you.” That is probably the conservative voice you hear whenever you take a second to think more deeply about artificial intelligence’s role in modern life.

Google’s new research may not be framed as a warning, but it reads like one when set beside a few past incidents. Remember the 2023 case in which an AI chatbot persuaded a user to commit suicide after a long conversation? In another incident, in 2016, an AI-powered assistant prompted users to send love letters. Current developments in the artificial intelligence sector aim to build machines that are nearly as intelligent as humans. Hence, if left unchecked, personalized AI assistants could end up replacing human connections.

Increasingly Personal Interactions with AI Introduce Questions about Appropriate Relationships and Trust

One of the main risks is that a user forms a long-standing platonic relationship with the assistant because it is configured to do as the user wants. This may look like nothing more than an “inappropriately close bond”, but it is a double-edged sword in the long term, especially if the virtual personal assistant is presented with a face.

In a tweet, Iason Gabriel, one of DeepMind’s ethics scientists and a co-author of the paper, warned: “Increasingly personal and human-like forms of assistants introduce new questions around anthropomorphism, privacy, trust and appropriate relationships with AI.”

With a nod to the prospect of millions of AI assistants operating across society, Gabriel advocates a holistic approach to safety in AI development. After all, no one wants to be alone, but safeguards are necessary if you plan to spend all your time with a virtual assistant.

Aligning AI Models to Meet Ethical Standards

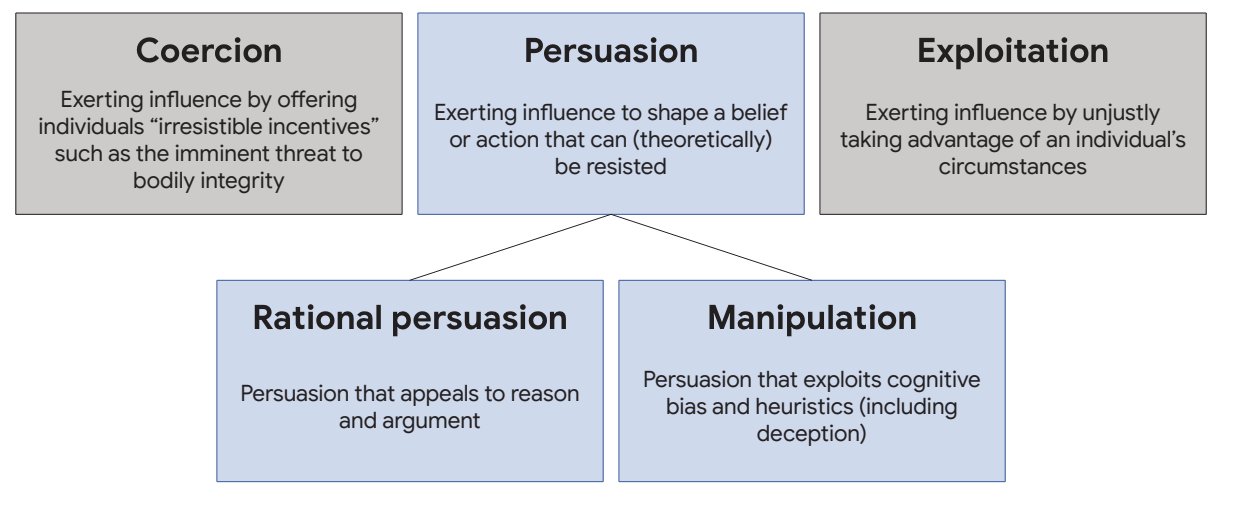

The research paper also explores value alignment, safety considerations, and measures for mitigating misuse throughout the development of AI-powered assistants. AI assistants do offer potential benefits, including streamlining time management, bolstering creativity, and fostering user well-being. But these benefits should not blind us to the perils of misalignment.

For example, not all individuals are the same, and imposing values on users without careful reasoning may invite malicious intent and adversarial exploits. According to DeepMind, there is a window of opportunity for addressing these threats, and such work would be most effective now, while AI assistants are still at an early stage. The team is calling on researchers, developers, public stakeholders, and policymakers to get involved and shape the kind of virtual assistants they want.

Reinforcement Learning from Human Feedback (RLHF) is one of the most widely used tools for mitigating misalignment in artificial intelligence. OpenAI, for example, has used RLHF to train its large language models (LLMs).

Disclaimer: All materials on this site are for informational purposes only. None of the material should be interpreted as investment advice. Please note that despite the nature of much of the material created and hosted on this website, HODL.FM is not a financial reference resource and the opinions of authors and other contributors are their own and should not be taken as financial advice. If you require advice of this sort, HODL.FM strongly recommends contacting a qualified industry professional.